Transforming Free TrueNAS or Debian Distribution into a 2‑Node High‑Availability System with floating IP (2025 Edition)

Introduction

In today's data‑driven environment, uninterrupted access to storage is critical. This guide details converting the free version of TrueNAS (or any APT/Debian‑based system) into a two‑node high‑availability (HA) setup with replicated, redundant storage. We'll walk you through re‑enabling package management (using apt) for custom installations, configuring synchronous replication with DRBD, and managing failover with Pacemaker/Corosync. We'll also incorporate best practices from real‑world ZFS cluster quickstarts and high‑performance clustering projects, including configuring a floating IP so that clients always connect to the active node.

1. Hardware, Network & Prerequisites

Hardware Requirements

- Two Identical TrueNAS Nodes: Ensure both nodes have identical hardware, including redundant network interfaces. (You may enable bonding across replication interfaces using IEEE 802.3ad/LACP.)

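- Storage Devices: Local disks or JBOD enclosures of matching size in each node. DRBD replicates them between the nodes, so no physically shared storage is required.

- Dedicated Networks: Keep heartbeat and replication traffic on networks separate from client traffic to improve performance and security.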

Software Requirements

- TrueNAS (Free Edition) (or a Debian/apt‑based distro)

- DRBD: For block‑level synchronous replication.

- Pacemaker & Corosync: For cluster management and resource failover.

- APT (or Alternate Package Manager): Install additional software and custom tools.

- ZFS: Using features like snapshots and self‑healing to manage your storage pool.

Insight: A high‑performance cluster benefits from a clear separation between client, replication, and management networks. This minimises interference during failover and heavy workloads.

1.1 Limitations

2. Installing TrueNAS and Basic Configuration

- Install TrueNAS on both nodes using the standard installer.

- Assign static IP addresses to each node for management and replication.

- Verify Connectivity over the dedicated management network.

- Configure system settings (time zone, NTP time synchronisation, etc.) identically on both nodes.

3. Re‑Enabling APT and Preserving Custom Settings

TrueNAS often disables the underlying package manager. To add extra software and maintain these settings during system upgrades, follow these steps:

3.1 Enable the Package Manager

Access the shell (via SSH or console) and run:

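sudo system-service update --enable-apt

(This command may not exist on your release, so verify it against your version's documentation. Recent TrueNAS SCALE releases instead provide an unsupported developer‑mode helper, install-dev-tools, which re‑enables apt. On a plain Debian system apt is already available and this step can be skipped.)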

3.2 Update and Upgrade Packages

sudo apt update && sudo apt upgrade -y

3.3 Ensure Configuration Persistence

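Store custom configuration files in a persistent directory, move the original /etc/apt aside, and symlink the persistent copy into its place (ln cannot replace an existing directory with a symlink):

sudo mkdir -p /usr/local/etc/apt-custom

sudo cp -a /etc/apt/. /usr/local/etc/apt-custom/

sudo mv /etc/apt /etc/apt.orig

sudo ln -s /usr/local/etc/apt-custom /etc/apt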

Create a backup script (schedule it with cron or a systemd timer to make it automatic):

printf '#!/bin/sh\nmkdir -p /etc/apt.backup\ncp -a /etc/apt/. /etc/apt.backup/\n' | sudo tee /usr/local/bin/backup-apt.sh

sudo chmod +x /usr/local/bin/backup-apt.sh
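A slightly more defensive variant keeps timestamped copies instead of overwriting a single backup. The sketch below is only an illustration under stated assumptions: the function name backup_dir and the destination layout are hypothetical and not part of TrueNAS or apt.

```shell
# backup_dir SRC DEST_ROOT
# Copies the contents of SRC into DEST_ROOT/<basename of SRC>.<timestamp>
# and prints the path of the new copy. Hypothetical helper for illustration.
backup_dir() {
    src=$1
    dest_root=$2
    stamp=$(date +%Y%m%d-%H%M%S)
    target="$dest_root/$(basename "$src").$stamp"
    mkdir -p "$target" || return 1
    # -a preserves ownership, permissions and timestamps. The trailing "/."
    # copies the directory contents and follows SRC if it is a symlink.
    cp -a "$src/." "$target/" || return 1
    printf '%s\n' "$target"
}
```

Run as root, backup_dir /etc/apt /var/backups would create a directory such as /var/backups/apt.<timestamp>. Storing the copies on a data pool rather than the boot device may make them more likely to survive a reinstall.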

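Insight: Keeping your package configuration outside the system's default directories makes it easier to restore after an upgrade. TrueNAS upgrades install a fresh boot environment, so confirm after each upgrade that the directory and symlink are still in place, and keep a copy on a data pool.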

4. Configuring DRBD for Synchronous Block Replication

DRBD mirrors storage in real time between nodes, ensuring data consistency.

4.1 Install DRBD

sudo apt install drbd-utils -y

4.2 Create the DRBD Resource File

Create a file (e.g. /etc/drbd.d/truenas.res) with the following content:

resource truenas {
    net {
        protocol C;    # fully synchronous replication
    }
    on node1 {         # must match the output of uname -n on this host
        device /dev/drbd0;
        disk /dev/sda3;
        address 192.168.1.101:7788;
        meta-disk internal;
    }
    on node2 {
        device /dev/drbd0;
        disk /dev/sda3;
        address 192.168.1.102:7788;
        meta-disk internal;
    }
}
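DRBD matches each on section against the local host name as reported by uname -n, so a name mismatch is a common reason for drbdadm to reject a resource file. As a rough pre‑flight check, the sketch below tests whether a resource file contains a section for a given host. The function name res_has_host is hypothetical, and the parsing is deliberately simple (it only looks for lines of the form "on <name> {").

```shell
# res_has_host FILE [HOSTNAME]
# Succeeds if FILE contains an "on HOSTNAME {" section.
# HOSTNAME defaults to this machine's `uname -n`.
res_has_host() {
    file=$1
    host=${2:-$(uname -n)}
    awk -v h="$host" '
        { sub(/#.*/, ""); gsub(/[{]/, " ") }   # drop comments, detach braces
        $1 == "on" && $2 == h { found = 1 }
        END { exit !found }
    ' "$file"
}
```

On each node, res_has_host /etc/drbd.d/truenas.res || echo "no section for $(uname -n)" would flag a missing or misspelled host name before you run drbdadm.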

4.3 Initialise and Start DRBD

On both nodes:

sudo drbdadm create-md truenas

sudo drbdadm up truenas

On one node only (node1 here), force it into the primary role to start the initial synchronisation:

sudo drbdadm primary --force truenas

Enhancement Tip: Always validate DRBD status after initialisation:

sudo drbdadm status truenas

Proceed only once the output shows both the local disk and the peer disk as UpToDate, which confirms that the nodes are synchronised.
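The status check can be scripted so that later steps refuse to run while a resync is still in progress. This is a minimal sketch that assumes the DRBD 9 style of drbdadm status output, where the local state appears as disk:<state> and each peer as peer-disk:<state>. The function name drbd_in_sync is hypothetical.

```shell
# drbd_in_sync
# Reads `drbdadm status <resource>` output on stdin and succeeds only if
# a local disk and a peer disk are present and every one is UpToDate.
drbd_in_sync() {
    status=$(cat)
    # Local disk must be UpToDate (the leading space excludes "peer-disk:").
    printf '%s\n' "$status" | grep -Eq '(^|[[:space:]])disk:UpToDate' || return 1
    # At least one peer disk must be UpToDate.
    printf '%s\n' "$status" | grep -q 'peer-disk:UpToDate' || return 1
    # No disk or peer-disk field may report any other state.
    if printf '%s\n' "$status" | grep -Eo '(peer-)?disk:[A-Za-z]+' | grep -qv ':UpToDate$'; then
        return 1
    fi
    return 0
}
```

A typical use would be sudo drbdadm status truenas | drbd_in_sync && echo "in sync". If your DRBD version prints a different format, adapt the patterns accordingly.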

5. Setting Up Pacemaker for Cluster Management

Pacemaker (in tandem with Corosync) handles resource monitoring and orchestrates failover.

5.1 Install Pacemaker and Corosync

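sudo apt install pacemaker corosync pcs -y

sudo passwd hacluster && sudo systemctl enable --now pcsd

(pcs provides the commands used in the following steps. Run both commands on both nodes and set the same hacluster password on each.)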

5.2 Cluster Authentication and Setup

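Authenticate the nodes and create the cluster (syntax for pcs 0.10 and later, as shipped with current Debian releases; pcs 0.9 used pcs cluster auth and pcs cluster setup --name instead):

sudo pcs host auth node1 node2 -u hacluster -p yourpassword

sudo pcs cluster setup truenas-ha node1 node2

sudo pcs cluster start --all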

5.3 Define Cluster Resources

5.3.1 DRBD Resource Configuration

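sudo pcs resource create drbd_truenas ocf:linbit:drbd drbd_resource=truenas op monitor interval=30s

sudo pcs resource promotable drbd_truenas master_drbd promoted-max=1 promoted-node-max=1 clone-max=2 clone-node-max=1 notify=true

(The drbd_resource parameter ties the agent to the truenas resource defined in section 4.2. pcs resource master was removed in pcs 0.10 in favour of promotable clones. If your pcs version does not accept a custom clone ID, omit master_drbd and use the generated name drbd_truenas-clone in the constraints that follow.)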

5.3.2 ZFS Resource Integration

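After configuring DRBD, create your ZFS pool on top of the replicated block device. Run this on the DRBD primary only. The resource file defines a single device, /dev/drbd0, so the pool uses that device directly:

sudo zpool create truenas_pool /dev/drbd0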

sudo zfs create truenas_pool/ha_data

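Define a cluster resource that imports and exports the pool (or integrate it into a custom resource script). For example, using the ocf:heartbeat:ZFS agent from the resource-agents package, if your version ships it:

sudo pcs resource create zfs_pool ocf:heartbeat:ZFS pool=truenas_pool op monitor interval=30s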

5.3.3 Additional Services

If you're running containerised apps or object storage, add them as Pacemaker resources. For example, for a service managed by a systemd unit (app_service and the unit name my-app are placeholders):

sudo pcs resource create app_service systemd:my-app op monitor interval=30s

Enhancement Tip: Fencing and resource constraints are essential in high‑performance clusters. Configure fencing devices so a failed node is reliably isolated during failover; without fencing, a two‑node DRBD cluster is exposed to split brain.

6. Adding a Floating IP (VIP)

A floating IP ensures clients only need one IP address to access the HA system. Whichever node is active at a given time will automatically host this IP.

6.1 Create a Floating IP Resource

Use Pacemaker's built‑in IPaddr2 resource agent (provided by the ocf:heartbeat suite). Example:

sudo pcs resource create cluster_vip ocf:heartbeat:IPaddr2 \
    ip=192.168.1.200 cidr_netmask=24 \
    op monitor interval=30s

- ip: The floating IP address (e.g. 192.168.1.200).

- cidr_netmask: Your network's subnet mask in CIDR notation (e.g. 24 for a /24).
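When no interface is named, IPaddr2 normally picks one by looking for an existing address in the same network as ip, so a floating IP outside the nodes' subnet is an easy mistake to make. The following sketch checks that two IPv4 addresses share a subnet for a given prefix length. The function names ip_to_int and same_subnet are hypothetical, and the code assumes plain dotted‑quad addresses without leading zeros.

```shell
# ip_to_int A.B.C.D: print the address as a 32-bit integer.
# The body runs in a subshell so the IFS change does not leak.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# same_subnet IP1 IP2 PREFIX: succeed if both addresses fall in the
# same network for the given CIDR prefix length (0-32).
same_subnet() {
    a=$(ip_to_int "$1")
    b=$(ip_to_int "$2")
    mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
    [ $(( a & mask )) -eq $(( b & mask )) ]
}
```

With the addresses used in this guide, same_subnet 192.168.1.200 192.168.1.101 24 succeeds, while a VIP of 192.168.2.200 would fail the check.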

6.2 Constrain the Floating IP to the Active Node

You generally want the floating IP to run on whichever node is the DRBD primary (and/or hosting the ZFS pool). Configure ordering and colocation constraints:

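sudo pcs constraint colocation add cluster_vip with Promoted master_drbd INFINITY

sudo pcs constraint order promote master_drbd then start cluster_vip

(Without the role keyword, the VIP could be placed with either DRBD instance, including the secondary. pcs versions before 0.11 use master in place of Promoted. If ZFS is also a factor, you might order the VIP after the ZFS resource or co-locate it with whichever resource is essential.)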

6.3 Verify the Floating IP

Once the resource is created and constraints are set, Pacemaker will assign the floating IP to the correct node. Confirm with:

sudo pcs status

ip a

You should see 192.168.1.200 (or your chosen IP) bound to the active node's interface.
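For scripted checks, the same verification can be done by filtering the one‑line output of ip. This is a small sketch; the function name has_vip is hypothetical, and it assumes the output format of ip -o -4 addr show, where each address appears as "inet <address>/<prefix>".

```shell
# has_vip ADDRESS
# Reads `ip -o -4 addr show` output on stdin and succeeds if ADDRESS is
# bound to any interface. Fixed-string matching with the surrounding
# " inet " and "/" prevents partial matches such as .20 versus .200.
has_vip() {
    grep -Fq " inet $1/"
}
```

On either node, ip -o -4 addr show | has_vip 192.168.1.200 && echo "VIP is active here" should print the message only on the active node.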

Usage: Configure clients (e.g., NFS, SMB, iSCSI initiators) to connect to this floating IP. If the primary node fails, Pacemaker will automatically move the VIP (and associated services) to the surviving node.

7. Tuning and Advanced Configuration

7.1 Network and Fencing

- Separate Networks: Use dedicated channels for replication, management, and client traffic.

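- Fencing: Configure IPMI-based fencing to cleanly power‑cycle failed nodes. Create one device per node, each pointing at that node's BMC address, which must differ from the floating IP (the BMC addresses and credentials below are placeholders). Example:

sudo pcs stonith create fence_node1 fence_ipmilan pcmk_host_list="node1" \
    ip="192.168.1.211" username="admin" password="password" \
    lanplus="1" op monitor interval=60s
sudo pcs stonith create fence_node2 fence_ipmilan pcmk_host_list="node2" \
    ip="192.168.1.212" username="admin" password="password" \
    lanplus="1" op monitor interval=60s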

7.2 Cluster Resource Constraints

Add ordering and colocation constraints to ensure the correct start/stop sequence for resources. For example:

sudo pcs constraint order promote master_drbd then start zfs_pool

sudo pcs constraint colocation add zfs_pool with Promoted master_drbd INFINITY

Tip: These constraints help avoid race conditions, ensuring the ZFS pool is imported only after DRBD has been promoted to primary on that node. (pcs versions before 0.11 use master in place of Promoted.)

8. Testing and Validation

8.1 Verify Cluster Health

Check the overall cluster status:

sudo pcs status

8.2 Simulate Failover

Disconnect the network or power of one node and verify:

- DRBD promotes the secondary node.

- The ZFS pool is imported and mounted on the new primary.

- The floating IP moves to the new primary node.

- Additional services restart seamlessly.

8.3 Monitor Logs and Synchronisation

Use the following commands to confirm that all components are functioning correctly:

sudo drbdadm status truenas

sudo journalctl -u pacemaker

9. Warning

Why a 2-Node Active/Passive Cluster Is Functional but Cannot Form a Valid Quorum

- Split-Brain Scenario – If the two nodes lose communication with each other (network failure), both may assume they should be the active node. This can lead to data corruption or service inconsistencies because there is no way to determine which node should be in control.

- No Majority Quorum – In quorum-based systems, a majority of nodes must agree on which one is active. A two-node cluster means that a single-node failure results in no majority (1 out of 2 = 50%, not >50%). Without a third-party tie-breaker, neither node can safely take over.

- Failover Issues – If the active node fails, the passive node does not have an external mechanism to determine if it should become active without risking a split brain.

- No Tie-Breaking Mechanism – With two nodes, a network partition leaves each side with exactly half the votes. With an odd number of nodes (3 or more), a two-way partition always leaves one side with a majority, so failover decisions can be made safely.

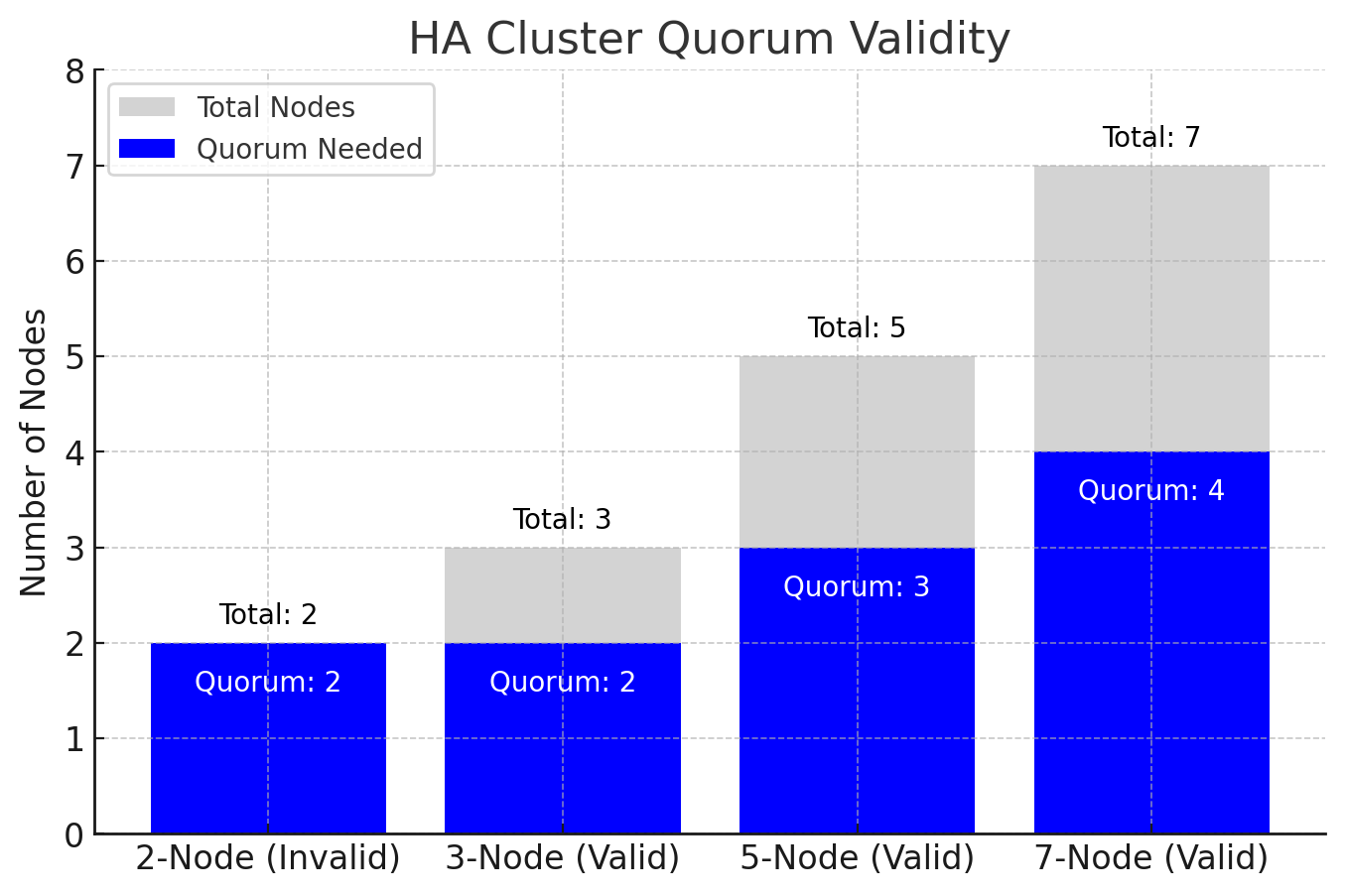

What is a Valid Number of Nodes?

A minimum of 3 nodes is required for a proper quorum in an HA cluster. This allows for a majority vote:

- 3-node cluster: The majority is 2 out of 3 nodes.

- 5-node cluster: The majority is 3 out of 5 nodes.

- 7-node cluster: The majority is 4 out of 7 nodes.

- Odd numbers ensure a decision can always be reached.

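In cases where only two nodes are available, a tie-breaker mechanism such as an external quorum device (witness node or quorum disk) is necessary. With Corosync this is typically a quorum device (corosync-qdevice) backed by a corosync-qnetd server running on a third host.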

Chart: Valid and Invalid Quorum Configurations

The chart above illustrates why a 2-node HA quorum is invalid compared to valid configurations with 3, 5, and 7 nodes.

- 2-Node (Invalid): Needs both nodes for quorum (100%), meaning a failure causes loss of quorum.

- 3-Node (Valid): Requires two nodes for quorum (majority), allowing one node to fail.

- 5-Node (Valid): Requires three nodes for quorum, allowing two nodes to fail.

- 7-Node (Valid): Requires four nodes for quorum, allowing three nodes to fail, the highest resilience of the configurations shown.
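The numbers in this list follow from simple majority arithmetic: a cluster of N nodes needs floor(N/2) + 1 votes, and can lose the remainder. The sketch below reproduces them. It models plain majority voting only and does not account for quorum devices or any cluster-specific two-node options; the function names are hypothetical.

```shell
# quorum_votes N: smallest number of votes that is a strict majority of N.
quorum_votes() {
    echo $(( $1 / 2 + 1 ))
}

# tolerated_failures N: nodes that may fail while the rest keep quorum.
tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

# Print one line per cluster size discussed above.
for n in 2 3 5 7; do
    echo "$n nodes: quorum $(quorum_votes "$n"), tolerates $(tolerated_failures "$n") failure(s)"
done
```

For two nodes the result is a quorum of 2 with 0 tolerated failures, which is exactly why a tie-breaker is needed in that configuration.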

10. Final Thoughts

Following these detailed steps, you've transformed a free TrueNAS (or Debian‑based) installation into a fully operational two‑node HA system. With a floating IP, clients only need to connect to one address, ensuring uninterrupted access even if a node fails.

Key Takeaways:

- Persistent Package Management: Keeping apt configuration in a persistent location helps your custom software survive upgrades.

- Synchronous Replication: DRBD ensures data is always mirrored across nodes.

- Coordinated Failover: Pacemaker and Corosync maintain resource order and integrity during node failures.

- Floating IP: A single IP address automatically moves to the active node, simplifying client connections.

- Advanced Tuning: Network separation, fencing, and resource constraints elevate the cluster's robustness.

For HA quorum to work correctly, always use an odd number of nodes (≥3) or add a tie-breaker mechanism in a 2-node setup.

Deploy this solution in your environment and enjoy the benefits of a resilient, high‑performance storage system built for the demands of 2025 and beyond.

Happy clustering!