Sentiment Detection for LLMs: A Technical Deep Dive with Python

Introduction

Sentiment analysis is crucial in natural language processing (NLP), helping LLMs understand the tone and emotion behind text. Whether for chatbots, AI assistants, or content moderation, sentiment detection enables models to classify text as positive, neutral, or negative.

In this technical deep dive, we'll explore:

- Different approaches for sentiment detection

- Implementing sentiment analysis using Python

- Fine-tuning LLMs for better sentiment detection

- Comparing lexicon-based vs ML-based vs LLM-powered approaches

1. Approaches to Sentiment Detection

1.1 Lexicon-Based Sentiment Detection

- Uses predefined word lists (e.g., VADER, TextBlob) with sentiment scores.

- It is fast and explainable but lacks contextual understanding.

1.2 Machine Learning-Based Sentiment Detection

- Uses ML models like Naïve Bayes, Logistic Regression, and SVMs trained on labeled data.

- It has better Accuracy than lexicon-based methods but requires training data.

1.3 LLM-Powered Sentiment Analysis

- Uses transformer-based models like BERT, GPT, and RoBERTa to understand the sentiment in context.

- High Accuracy but computationally expensive.

2. Implementing Sentiment Detection in Python

We will implement sentiment detection using the following:

- VADER (Lexicon-Based)

- TextBlob (Lexicon-Based)

- Hugging Face Transformers (BERT-Based LLM)

2.1 Installing Dependencies

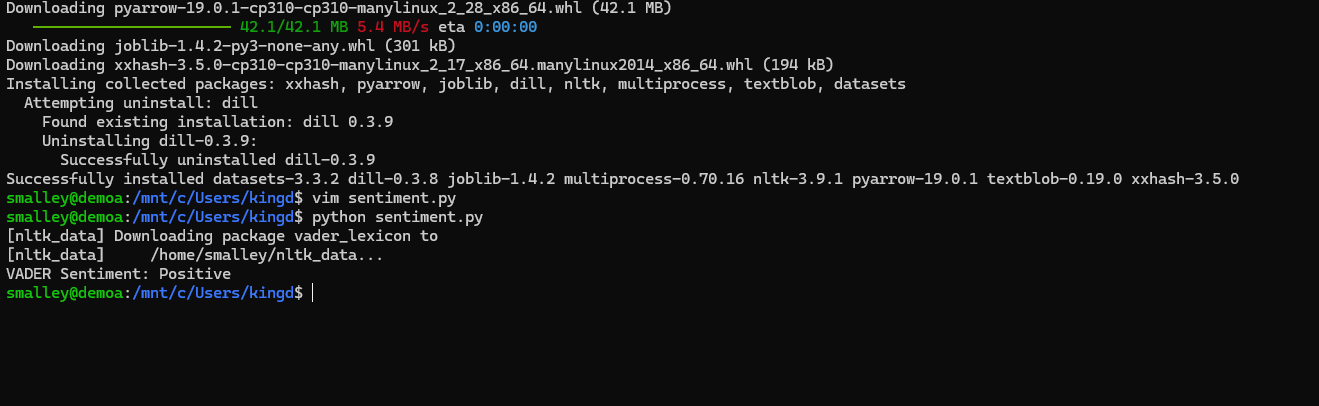

pip install --user nltk textblob transformers torch datasets2.2 Lexicon-Based Sentiment Analysis (VADER)

import nltk

from nltk.sentiment import SentimentIntensityAnalyzer

nltk.download('vader_lexicon')

sia = SentimentIntensityAnalyzer()

def analyze_sentiment_vader(text):

score = sia.polarity_scores(text)

if score['compound'] >= 0.05:

return "Positive"

elif score['compound'] <= -0.05:

return "Negative"

else:

return "Neutral"

text = "I love using AI for sentiment analysis!"

print(f"VADER Sentiment: {analyze_sentiment_vader(text)}")

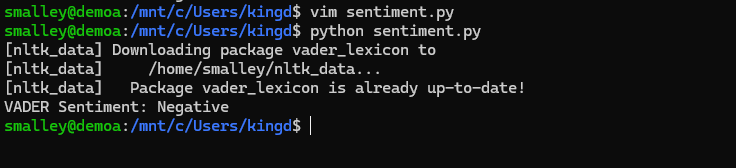

Change the text =

text = "I am so frustrated using AI for sentiment analysis!"

2.3 Lexicon-Based Sentiment Analysis (TextBlob)

from textblob import TextBlob

text = "I love using AI for sentiment analysis!"

def analyze_sentiment_textblob(text):

score = TextBlob(text).sentiment.polarity

return "Positive" if score > 0 else "Negative" if score < 0 else "Neutral"

print(f"TextBlob Sentiment: {analyze_sentiment_textblob(text)}")2.4 LLM-Based Sentiment Analysis (BERT)

from transformers import pipeline

sentiment_pipeline = pipeline("sentiment-analysis")

def analyze_sentiment_bert(text):

result = sentiment_pipeline(text)

return result[0]['label']

print(f"BERT Sentiment: {analyze_sentiment_bert(text)}")3. Fine-Tuning an LLM for Sentiment Detection

Instead of using a pre-trained model, we can fine-tune BERT on a sentiment dataset for better results.

3.1 Loading a Dataset

from datasets import load_dataset

dataset = load_dataset("imdb")

print(dataset["train"][0])3.2 Fine-Tuning BERT

from transformers import AutoModelForSequenceClassification, TrainingArguments, Trainer

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

model = AutoModelForSequenceClassification.from_pretrained("bert-base-uncased", num_labels=2)Training the model:

training_args = TrainingArguments(

output_dir="./results",

evaluation_strategy="epoch",

save_strategy="epoch",

per_device_train_batch_size=8,

per_device_eval_batch_size=8,

num_train_epochs=3,

weight_decay=0.01,

)

trainer = Trainer(

model=model,

args=training_args,

train_dataset=dataset["train"],

eval_dataset=dataset["test"],

)

trainer.train()4. Comparing Sentiment Detection Methods

| Method | Accuracy | Context Understanding | Speed |

|---|---|---|---|

| VADER | Medium | Low | Fast |

| TextBlob | Medium | Low | Fast |

| Pre-trained BERT | High | High | Medium |

| Fine-Tuned BERT | Very High | Very High | Slower |

When to Use What?

- VADER/TextBlob → Fast analysis for short social media posts.

- Pre-trained BERT → Good for general sentiment analysis.

- Fine-Tuned BERT → Best for enterprise applications requiring high Accuracy.

5. Optimizing Sentiment Detection for LLMs

5.1 Handling Long Conversations

- Split long texts into chunks and analyze sentiment per segment.

- Use rolling sentiment analysis to track shifts in tone.

5.2 Improving Accuracy

- Fine-tune a transformer model on domain-specific sentiment data.

- Use zero-shot classification if predefined sentiment categories are needed.

5.3 Reducing Computational Costs

- Use distilled models (like distilBERT) for faster inference.

- Cache sentiment responses for frequently analyzed text.

6. Conclusion

Sentiment detection is essential for chatbots, social media monitoring, and content moderation. While lexicon-based approaches (VADER, TextBlob) offer quick solutions, LLMs like BERT provide superior Accuracy. Fine-tuning an LLM can improve results further but at a computational cost.

Leveraging the right sentiment detection approach for your use case can help you build more innovative, emotionally aware AI systems.

🚀 Start experimenting with sentiment detection today!