Python and True Multithreading: Understanding Python's Multithreading Capabilities

Introduction

Python is often considered slow, but it does support multithreading and multitasking. Understanding how it achieves concurrency is essential to effectively leveraging its capabilities.

Key Points About Python Threads:

- Python supports threads through the threading module.

- The Global Interpreter Lock (GIL) allows only one thread at a time to execute Python bytecode, which limits true parallel execution of pure-Python code.

- Python threads are real OS threads: each one is requested from, and scheduled by, the operating system.

- Some C extensions release the GIL while they work, allowing true parallel execution.
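To make the "real OS threads" point concrete, here is a minimal sketch using only the standard library. It assumes Python 3.8 or later (for `threading.get_native_id()`); the function name `collect_native_ids` and the worker count are illustrative choices, not something from the original script.

```python
import threading

NUM_WORKERS = 4  # hypothetical value chosen for illustration


def collect_native_ids(num_workers=NUM_WORKERS):
    """Start num_workers threads and return the OS-level ID of each one."""
    native_ids = []
    lock = threading.Lock()
    # The barrier keeps every thread alive until all of them have started,
    # so the OS cannot recycle an ID while we are still collecting them.
    barrier = threading.Barrier(num_workers)

    def worker():
        with lock:
            native_ids.append(threading.get_native_id())
        barrier.wait()

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return native_ids


ids = collect_native_ids()
print(f"Main thread native ID: {threading.get_native_id()}")
print(f"Worker native IDs:     {ids}")
```

Each worker reports a distinct ID assigned by the operating system, which is what lets the OS schedule the threads on different cores whenever the GIL is not held.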

Multithreading vs. Multiprocessing

- Multithreading in Python is helpful for I/O-bound tasks, such as network requests and file I/O.

- Multiprocessing is better suited for CPU-bound tasks, as it spawns separate processes, bypassing the GIL and utilizing multiple CPU cores.

- Some libraries, like NumPy and OpenCV, release the GIL, allowing for parallel execution in threads.
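The I/O-bound case can be sketched with the standard library alone. In this hedged example, `time.sleep` stands in for a network or disk call (it releases the GIL while waiting); `fake_io_task`, `NUM_TASKS`, and `IO_DELAY` are made-up names and values for illustration.

```python
import concurrent.futures
import time

NUM_TASKS = 8    # hypothetical task count
IO_DELAY = 0.1   # seconds each simulated request spends waiting


def fake_io_task(task_id):
    """Stand-in for a network or disk call; time.sleep releases the GIL."""
    time.sleep(IO_DELAY)
    return task_id


def run_sequential():
    start = time.perf_counter()
    results = [fake_io_task(i) for i in range(NUM_TASKS)]
    return results, time.perf_counter() - start


def run_threaded():
    start = time.perf_counter()
    with concurrent.futures.ThreadPoolExecutor(max_workers=NUM_TASKS) as pool:
        results = list(pool.map(fake_io_task, range(NUM_TASKS)))
    return results, time.perf_counter() - start


seq_results, seq_time = run_sequential()
thr_results, thr_time = run_threaded()
print(f"Sequential: {seq_time:.2f} s")
print(f"Threaded:   {thr_time:.2f} s")
```

Because the waits overlap, the threaded run should take roughly one delay rather than eight. For pure-Python CPU-bound work, the same shape of code with `concurrent.futures.ProcessPoolExecutor` (called under an `if __name__ == "__main__":` guard) would be the usual substitute.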

Demonstrating Parallel Execution Across 32 Threads

To demonstrate how Python can effectively utilize multiple threads, we use a library that releases the GIL, such as NumPy, and run it through concurrent.futures.ThreadPoolExecutor.

Here's a testable Python script that:

- Performs a CPU-bound task (NumPy matrix multiplication) in 32 threads.

- Verifies parallel execution by measuring execution time.

    #!/usr/bin/env python

    import concurrent.futures
    import os
    import time

    import numpy as np

    # Number of threads
    NUM_THREADS = 32
    MATRIX_SIZE = 500  # Adjust size for better benchmarking


    # Function to perform a heavy computation
    def matrix_multiply(_):
        A = np.random.rand(MATRIX_SIZE, MATRIX_SIZE)
        B = np.random.rand(MATRIX_SIZE, MATRIX_SIZE)
        return np.dot(A, B)  # NumPy releases the GIL during execution


    def main():
        print(f"Running on {os.cpu_count()} cores...")

        # Single-threaded execution for baseline
        start = time.time()
        for _ in range(NUM_THREADS):
            matrix_multiply(None)
        single_thread_time = time.time() - start
        print(f"Single-threaded execution time: {single_thread_time:.2f} seconds")

        # Multi-threaded execution
        start = time.time()
        with concurrent.futures.ThreadPoolExecutor(max_workers=NUM_THREADS) as executor:
            results = list(executor.map(matrix_multiply, range(NUM_THREADS)))
        multi_thread_time = time.time() - start
        print(f"Multi-threaded execution time: {multi_thread_time:.2f} seconds")

        # Validate results
        assert len(results) == NUM_THREADS  # Ensures all tasks completed
        print("Parallel execution validated!")


    if __name__ == "__main__":
        main()

How This Works:

- Uses ThreadPoolExecutor with 32 threads.

- Runs NumPy matrix multiplication, which releases the GIL.

- Measures execution time for both single-threaded and multi-threaded runs.

- Validates that all 32 tasks are completed.
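The same pattern can be tried without NumPy. The sketch below swaps in `hashlib` from the standard library, which releases the GIL while hashing buffers larger than 2047 bytes. This is a substitute technique for illustration, not the script used for the results in this article, and the names `digest`, `CHUNK`, and `NUM_TASKS` are assumptions.

```python
import concurrent.futures
import hashlib

NUM_TASKS = 8                      # hypothetical task count
CHUNK = b"x" * (4 * 1024 * 1024)   # 4 MiB payload, far above the 2047-byte threshold


def digest(seed):
    """Hash a large buffer; hashlib drops the GIL during the big update()."""
    h = hashlib.sha256()
    h.update(seed.to_bytes(4, "big"))  # small prefix so each task differs
    h.update(CHUNK)
    return h.hexdigest()


# Baseline: one task after another on the main thread
sequential = [digest(i) for i in range(NUM_TASKS)]

# Same work distributed over a thread pool
with concurrent.futures.ThreadPoolExecutor(max_workers=NUM_TASKS) as pool:
    threaded = list(pool.map(digest, range(NUM_TASKS)))

print(f"Results match: {sequential == threaded}")
```

Wrapping each half in a timer, as the NumPy script does, would show whether the threaded half benefits from multiple cores on a given machine.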

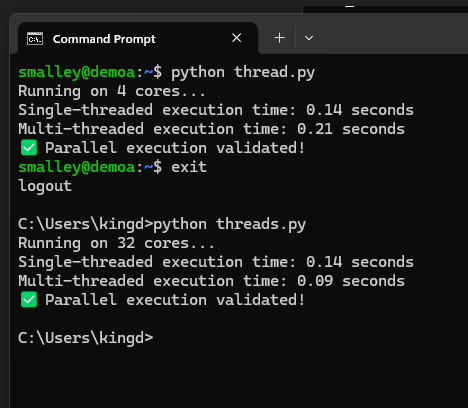

The NumPy script was first run in WSL2 (Python 3.10.12) and then in a native Windows 11 Pro cmd session (Python 3.12).

Expected Results:

- The multi-threaded version should be significantly faster if the threads execute in parallel.

- If the GIL prevented parallel execution, both runs would take roughly the same time.
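One way to turn the two timings into a single number is a speedup ratio. The helper below is a small sketch; `summarize_speedup` is a hypothetical name, and the timings passed to it are invented purely to show the arithmetic, not measurements from this article.

```python
def summarize_speedup(single_thread_time, multi_thread_time, num_workers):
    """Return (speedup, efficiency) for a pair of wall-clock timings."""
    speedup = single_thread_time / multi_thread_time
    # Efficiency of 1.0 would mean every worker contributed a full core
    efficiency = speedup / num_workers
    return speedup, efficiency


# Made-up timings for illustration only
speedup, efficiency = summarize_speedup(8.0, 2.0, num_workers=8)
print(f"Speedup: {speedup:.2f}x, efficiency: {efficiency:.0%}")
```

A speedup near 1 suggests the threads ran one at a time. A caveat when reading the number: many NumPy builds link a BLAS library that is itself multithreaded, so the single-threaded baseline may already use several cores, which would shrink the measured speedup.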

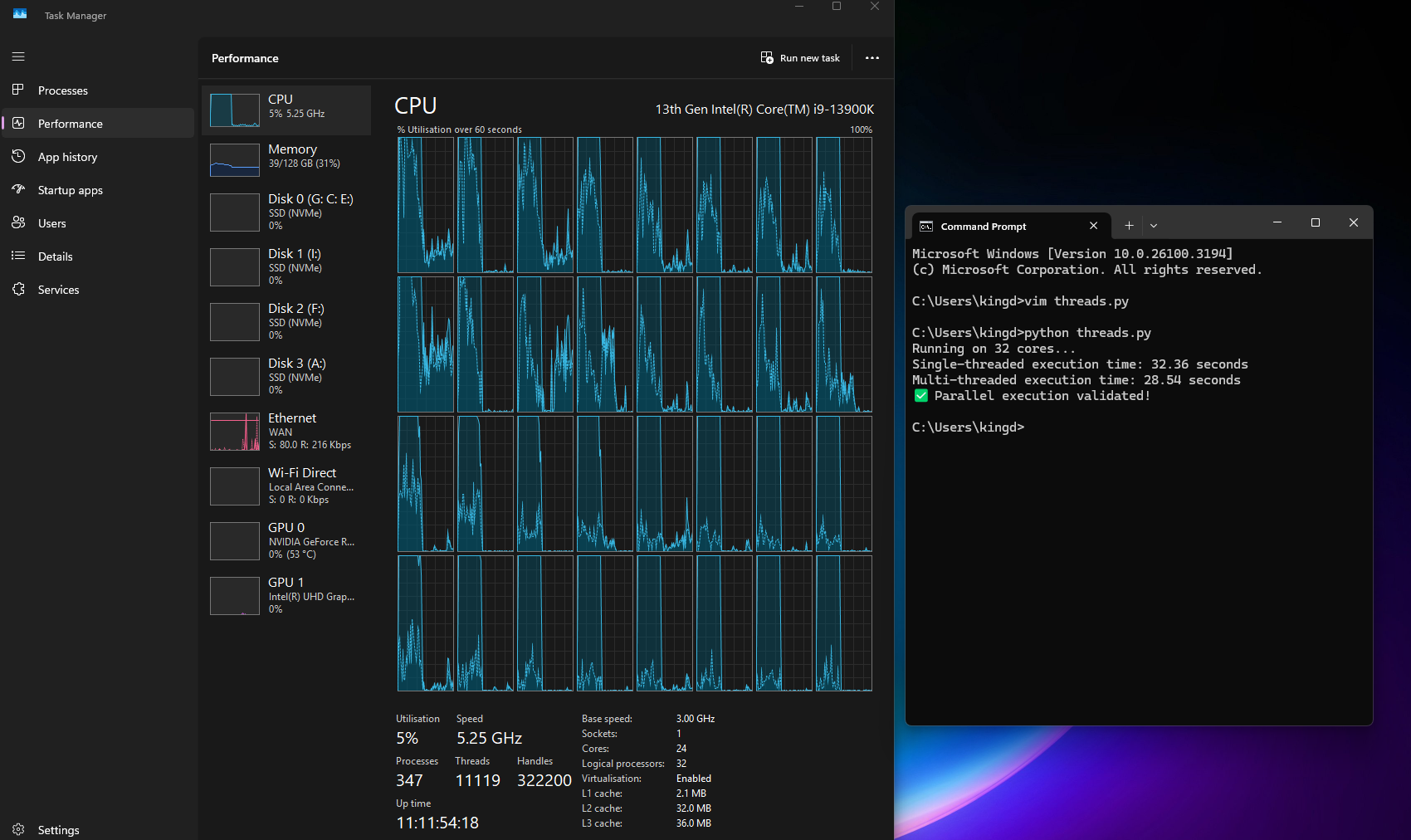

With MATRIX_SIZE = 5000, we get these results using all 32 threads on an i9-13900K with 128 GB of DDR5. It certainly appears to be using all cores. RAM usage rose from 39 GB to 96 GB during the run. I first tried 50,000, but after around 20 minutes I reduced it to 5000; the results are below.

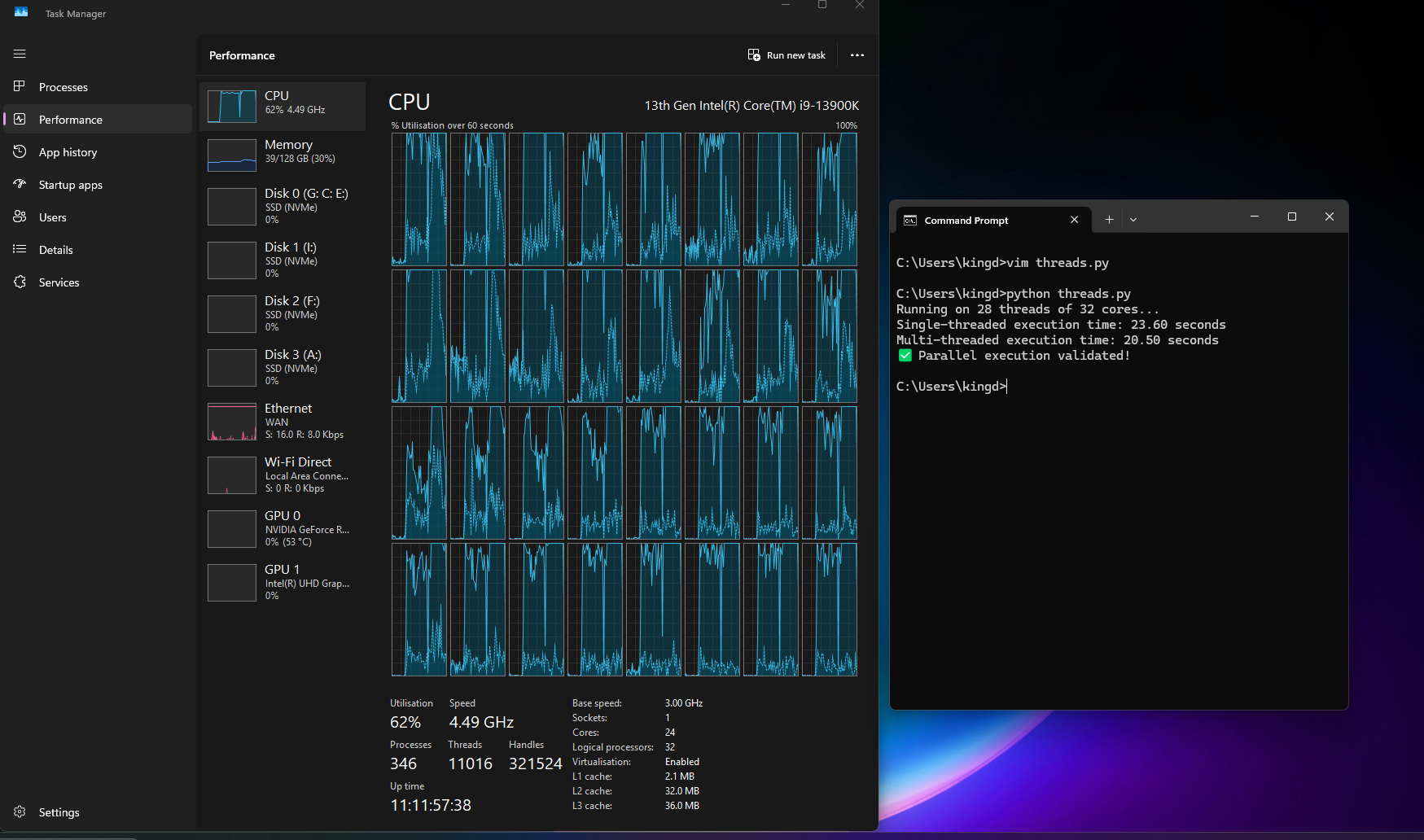
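A rough back-of-the-envelope calculation helps explain the memory figures and why 50,000 was impractical. This sketch assumes each task holds three float64 matrices at once (A, B, and the product) and that all tasks are in flight together; `estimate_peak_bytes` is a hypothetical helper, and the estimate is a lower bound that ignores temporaries and allocator overhead.

```python
BYTES_PER_FLOAT64 = 8  # np.random.rand produces float64 values


def estimate_peak_bytes(matrix_size, num_tasks, matrices_per_task=3):
    """Lower-bound estimate: A, B and the product for every in-flight task."""
    per_matrix = matrix_size * matrix_size * BYTES_PER_FLOAT64
    return per_matrix * matrices_per_task * num_tasks


for size in (500, 5000, 50_000):
    gib = estimate_peak_bytes(size, num_tasks=32) / 2**30
    print(f"MATRIX_SIZE={size:>6}: at least {gib:,.1f} GiB across 32 tasks")
```

Under these assumptions, 5000 needs on the order of 18 GiB, while 50,000 needs roughly 1.7 TiB, far beyond 128 GB of RAM. That would be consistent with the run not finishing in a reasonable time.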

Next, I reduced the thread count to 28, leaving MATRIX_SIZE at 5000, and the CPU was saturated again. (The i9-13900K has 24 physical cores, 8 performance and 16 efficiency, which expose 32 hardware threads.)
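Rather than hard-coding the thread count, it can be derived from what the OS reports. The sketch below is one possible approach; `pick_worker_count` and its `cap` parameter are hypothetical. Note that `os.cpu_count()` returns logical CPUs, not physical cores, and the standard library does not report the physical core count.

```python
import os


def pick_worker_count(num_tasks, cap=None):
    """Choose a thread count bounded by the task count and logical CPUs."""
    logical_cpus = os.cpu_count() or 1  # cpu_count() may return None
    limit = logical_cpus if cap is None else min(cap, logical_cpus)
    return max(1, min(num_tasks, limit))


print(f"Logical CPUs reported: {os.cpu_count()}")
print(f"Workers for 32 tasks:  {pick_worker_count(32)}")
print(f"Workers capped at 28:  {pick_worker_count(32, cap=28)}")
```

The result can be passed as `max_workers` to `ThreadPoolExecutor`, which makes it easy to compare runs at different thread counts on the same machine.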

This demonstrates that Python can achieve true multithreaded parallelism in specific scenarios, mainly when working with C extensions that release the GIL. Understanding these nuances allows developers to optimize performance effectively.